Well, I’d be lying if I said I hadn’t heard of containers in the past few years. Although I have barely used them in my job (solutions engineer), shouldn’t I be considering them more and more as a replacement for the applications my customers run on their servers?

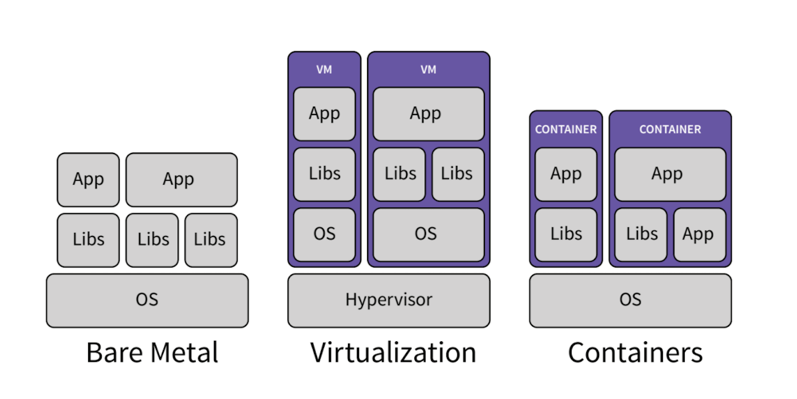

Many people who work as sysadmins these days are used to working with virtualization, in particular virtual machines (VMs). Customers still use them (and will continue to) to deploy their applications in an OS, which delivers great benefits over the legacy bare-metal approach.

Although there are other ways to deploy your applications (it always depends on the application, but we are taking a general approach here), containers are always on the minds of CxO people because of their advantages over virtualization and the trend they have become in the past few years.

But which is the correct approach for an application? As always, it depends, but I am going to talk about the technologies in use now and the trend that I can see.

Talking about virtualization

For many years, virtual machines have been the way to go for deploying applications on servers. You make the most of the hardware by running an «OS» (the hypervisor) on it, and inside it you run your VMs, assigning virtual resources as you wish.

This has been (and continues to be) the first approach for many new companies, as it’s now quite standardized.

In my opinion, VMware is the best-known vendor here, with its ESXi hypervisor, which has become a de facto standard for VMs.

I am not going to dive deeper into this, as you can find more information on Google (or whatever search engine you like to use).

Talking a bit about containers

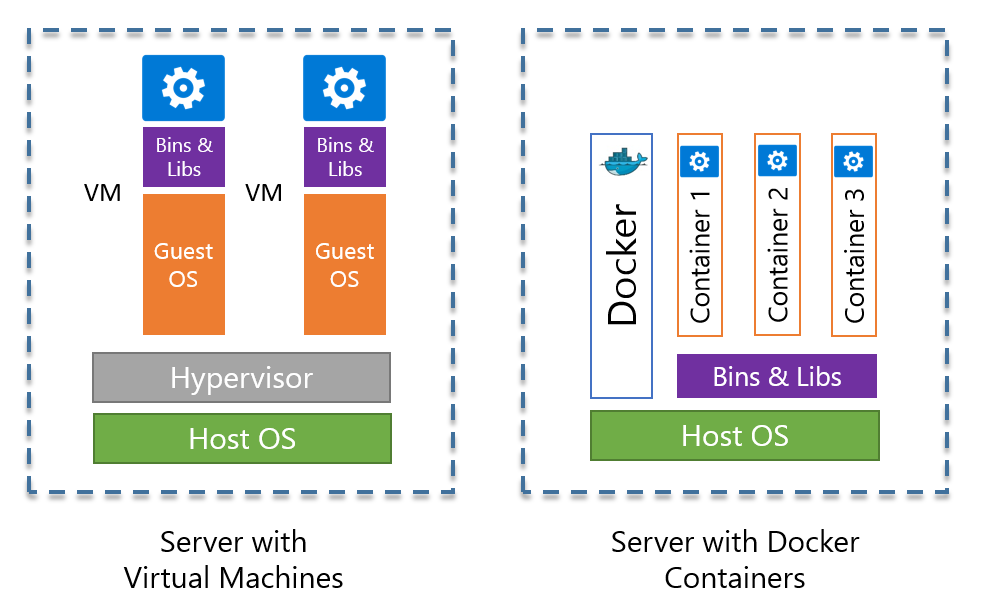

Docker is the best-known container runtime, although Podman seems to be its direct rival (that is only my perspective, and my understanding is limited).

Also, splitting your application into different services (containers) will normally let you scale, perform, etc. better than running it on VMs.

The main benefit of running services in containers is that a single OS hosts all of them: every container shares the kernel of that OS. Each container packages the dependencies of the application you are deploying in it, and the isolation between containers is managed by the container runtime (Docker, in the image).

Is there a mix? Well…

The main question I had the first time I saw how a container runtime works was: how «isolated» is each container from the others? And what about a security breach in the OS?
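To make that question a bit more concrete: on Linux, container runtimes build their isolation on kernel namespaces (plus cgroups for resource limits). The sketch below is only an illustration, assuming a Linux host with `/proc` mounted; the helper names `list_namespaces` and `shared_namespaces` are mine, not part of any tool. It lists the namespaces of the current process, so you can run it on the host and inside a container and compare the identifiers.

```python
import os

# Linux-only path; assumed to exist on the machine where this runs.
NS_DIR = "/proc/self/ns"


def list_namespaces(ns_dir: str = NS_DIR) -> dict:
    """Return {namespace_name: identifier} for the current process.

    Returns an empty dict when the directory does not exist
    (for example on a non-Linux system).
    """
    namespaces = {}
    if not os.path.isdir(ns_dir):
        return namespaces
    for name in sorted(os.listdir(ns_dir)):
        try:
            # Each entry is a symlink such as "net:[4026531992]".
            namespaces[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            continue  # entry not readable; skip it
    return namespaces


def shared_namespaces(a: dict, b: dict) -> list:
    """Names of the namespaces that have the same identifier in both dicts."""
    return sorted(name for name in a if a[name] == b.get(name))


if __name__ == "__main__":
    for name, ident in list_namespaces().items():
        print(f"{name}: {ident}")
```

If you save the output from the host and from a container, `shared_namespaces` tells you which namespaces the two have in common. Whatever differs is what the runtime isolated. Everything still runs on the same kernel, which is exactly where the security question comes from.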

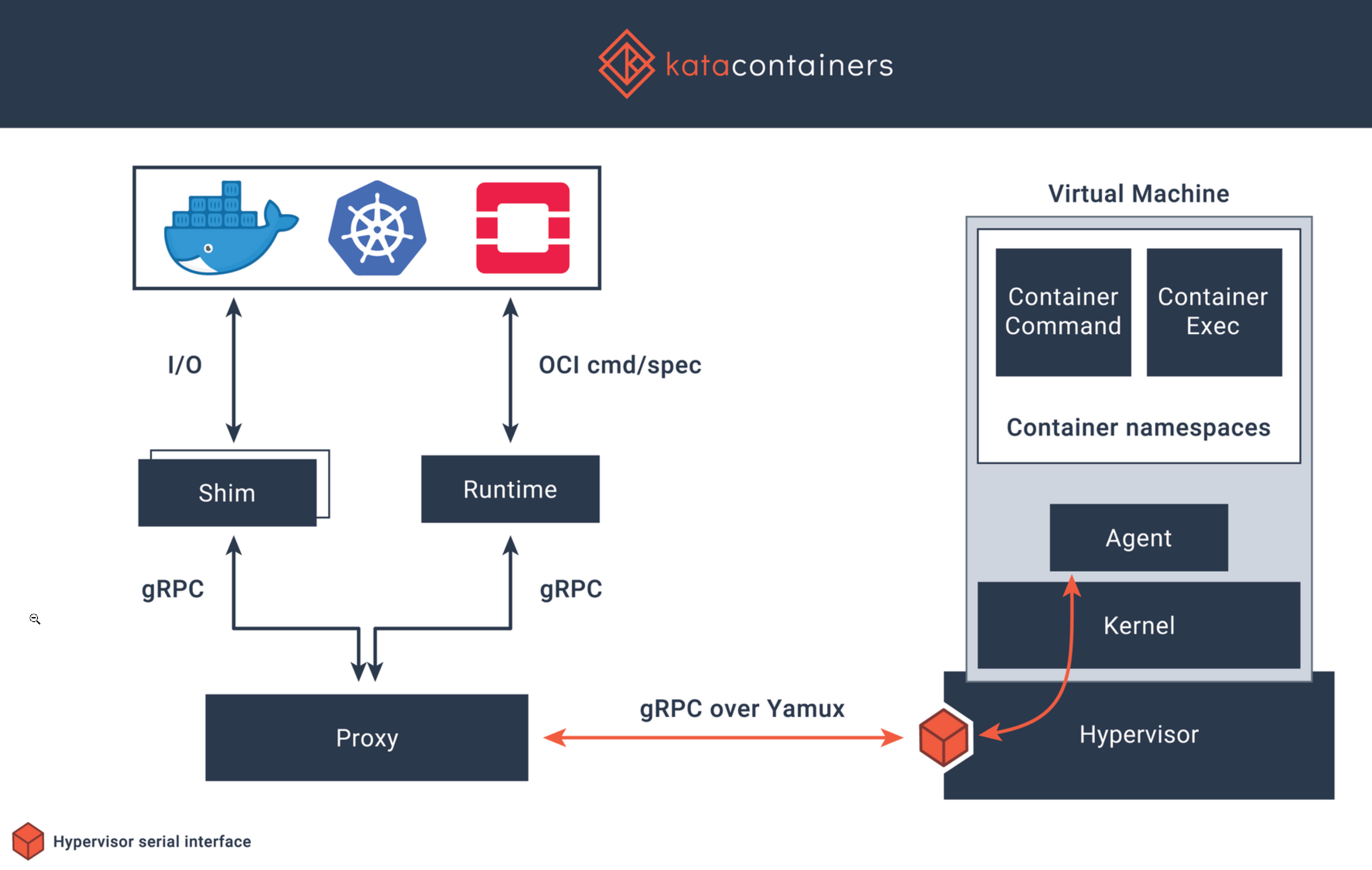

And that’s when I found a mix of both worlds (which I really need to dig into).

Kata Containers is a promising open-source project that combines both worlds and tries to deliver the best features of both technologies.

By running each container with a dedicated kernel (the core part of the operating system), it provides isolation from many resource perspectives, such as network, memory and I/O, while aiming to avoid the performance disadvantages that virtual machines have.

It also removes the need to nest containers inside virtual machines (something I do like in some environments, to get the isolation that a container runtime alone can’t provide).
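The dedicated-kernel point is something I could check roughly once I have a test environment. With a regular container runtime, the kernel release reported inside the container is the host’s, because the kernel is shared. With a dedicated guest kernel it will usually be different. This is a minimal sketch of that comparison; the function names and the release strings in the comments are made up for illustration.

```python
import platform


def kernel_release() -> str:
    """Kernel release as seen by the current process (like `uname -r`)."""
    return platform.release()


def has_own_kernel(host_release: str, container_release: str) -> bool:
    """True when the two release strings differ.

    Different strings show that the container is not using the host kernel.
    Equal strings are NOT proof of sharing: a guest kernel could simply be
    the same version as the host's.
    """
    return host_release.strip() != container_release.strip()


if __name__ == "__main__":
    # Run this once on the host and once inside the container,
    # then compare the two printed values with has_own_kernel().
    print(kernel_release())
```

For example, `has_own_kernel("5.15.0-91-generic", "6.1.38")` (hypothetical values) returns `True`, which is what I would expect to see from a container that boots its own kernel.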

Obviously not everything is good: it has some limitations around networking, host resources and more. You can find them here

Summary

We don’t know the future, but containers will surely remain a trend for many years.

You can see that even VMware has embedded Kubernetes (a container orchestrator) in its products.

So, will everything be containers, will it be a mix of containers and VMs, or could something like Kata Containers be the next big thing?

We will see. For now, let me research this last open-source project some more and see how it really delivers!